Course Design By

Nasscom & Wipro

You will master the basics of Big Data Hadoop, YARN, and MapReduce, and become an expert in writing applications with these tools.

Our Big Data Hadoop online training institute in India provides in-depth knowledge of HDFS, Sqoop, Pig, Hive, Oozie, shell scripting, Spark, Flume, and ZooKeeper.

With the leading Big Data Hadoop online training in India, you will acquire a clear understanding of the Big Data Hadoop cluster and learn Big Data Hadoop analytics as well.

Learn to create various ETL tools and how to set up pseudo nodes.

With the Big Data Hadoop online placement training, get hands-on experience through our real-life projects and assignments.

Big Data Hadoop Analyst - $110K

Big Data Hadoop Administrator - $125K

Big Data Hadoop Developer - $135K

Big Data Hadoop Architect - $170K

Big Data Hadoop Analytics is among the most sought-after data analytics tools.

It enhances the efficiency of an organization.

Organizations use Big Data Hadoop analytics tools to gain better insight into their sales and marketing activities.

Big Data Hadoop also boosts business processes, for example by supporting marketing on social media platforms.

Demand for certified Big Data Hadoop professionals is high because very few skilled analytics professionals are available in the market.

Backed by our experts and real-time sessions, the Big Data Hadoop training course offers comprehensive knowledge of Big Data Hadoop, Big Data Hadoop certifications, and current market trends in the field.

Our course curriculum blends theoretical and practical components.

The Big Data Hadoop online training covers the Big Data framework, storage and processing, Sqoop, Pig, Hive, Oozie, shell scripting, and Spark in detailed, practical sessions so that learners can easily grasp the subject. It also offers live sessions and provides study material, PPTs, projects, etc.

Upon completing the Big Data Hadoop certification training program, you will directly qualify for the next-level certification and can thereafter establish yourself as a Big Data Hadoop expert.

Get proficient in conducting database modeling and development, data mining, and warehousing.

Should be able to develop, implement and maintain Big Data solutions.

Should be an expert in designing and deploying data platforms across multiple domains ensuring operability.

Must have hands-on expertise in transforming data for meaningful analyses, improving data efficiency, reliability & quality, creating data enrichment, building high performance, and ensuring data integrity.

Must have sufficient experience working with the Big Data Hadoop ecosystem (HDFS, MapReduce, Oozie, Hive, Impala, Spark, Kerberos, Kafka, etc.).

Must know all about Spark Core, HBase or Cassandra, Pig, YARN, SQL, MongoDB, RDBMS, DW/DM, etc.

Need to play a crucial role in the development and deployment of innovative big data platforms for advanced analytics and data processing.

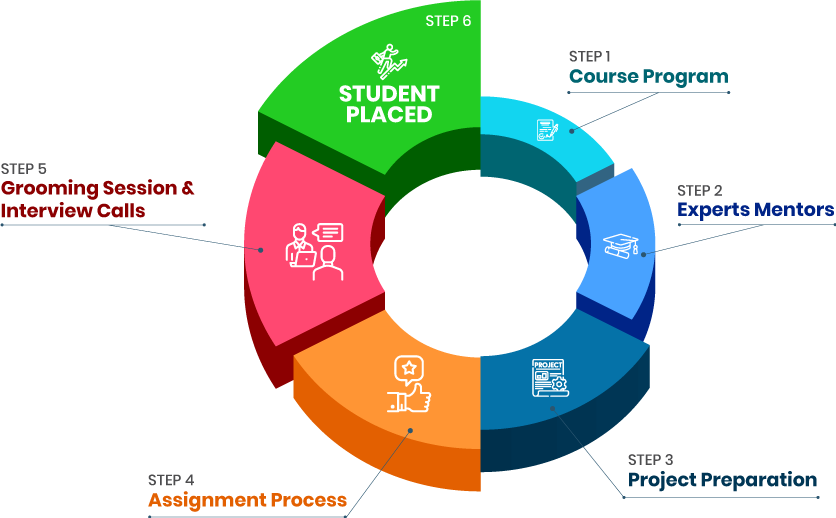

we train you to get hired.

Course Offered By

Croma Campus

You will get a certificate after completion of the program.

Empowering Learning Through Real Experiences and Innovation

Phone (For Voice Call): +91-971 152 6942

WhatsApp (For Call & Chat): +91-971 152 6942

Get a peek at the entire curriculum, designed to ensure placement guidance.

Ready to streamline your process? Submit your batch request today!

Croma Campus is one of the leading Big Data Hadoop online training institutes in India. It offers hands-on practical knowledge and practical implementation on live projects, and helps learners secure a job through the Big Data Hadoop online course. Training is delivered by industry experts with 8+ years of working experience in top organizations.

Croma Campus is associated with top organizations such as HCL, Wipro, Dell, BirlaSoft, Tech Mahindra, TCS, and IBM, which enables us to place our students in top MNCs across the globe. Our training curriculum is approved by our placement partners.

Croma Campus has mentored more than 3000 candidates through Big Data Hadoop Online Certification Training in India at a very reasonable fee. The course curriculum is customized to the requirements of candidates and corporates. You will get study material in the form of E-Books, Online Videos, Certification Handbooks, Certification and 500 Interview Questions, along with Project Source material.

For detailed information and a FREE demo class, call us at 120-4155255 or +91-9711526942, or write to us at info@cromacampus.com.

Address: G-21, Sector-03, Noida (201301)
