Course Designed By

Nasscom & Wipro

Learn how to build and manage data pipelines using tools like Apache Spark and Hadoop.

Get hands-on experience with cloud platforms (AWS, Azure) to handle big data.

Understand the ETL (Extract, Transform, Load) process to clean and organize data.

Learn about data storage methods like data lakes and data warehouses.

Become skilled in using programming languages like Python and SQL to manipulate data.

Entry-level salary: 5 LPA to 8 LPA.

Salaries increase with experience and knowledge of big data tools and cloud platforms.

With greater expertise, senior data engineers can earn 15 LPA or more.

Career path: Junior Data Engineer → Senior Data Engineer → Data Architect.

With experience, you can take on leadership roles, such as Data Engineering Manager.

You can also explore roles like Data Scientist or Lead Data Engineer.

Gurgaon is home to many tech companies looking for skilled data engineers.

Data engineering is a rapidly growing field with high job demand.

The course is designed to teach the latest data tools and technologies.

The training includes practical experience that prepares students for real-world challenges.

Develop and maintain data pipelines to collect and process data.

Manage data storage systems like data lakes and data warehouses.

Ensure the data is of high quality, secure, and available for analysis.

Work closely with data scientists to ensure they have clean, well-organized data.

Optimize how data is stored and processed to improve speed and efficiency.

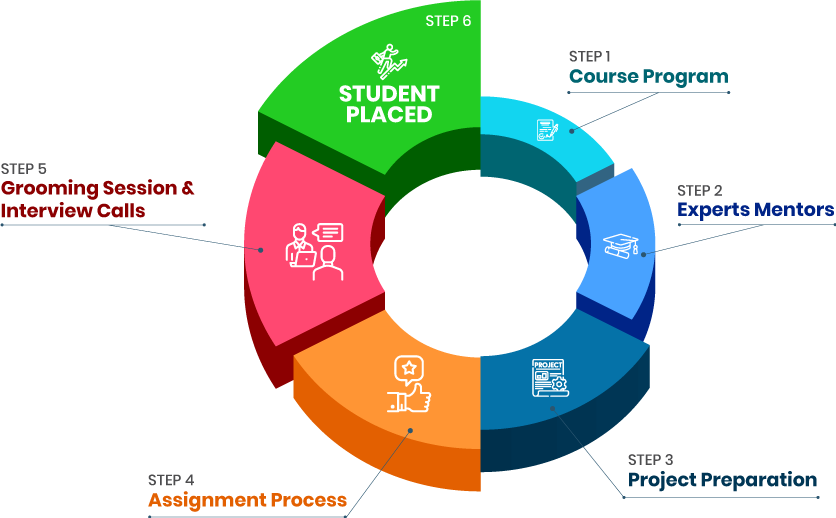

Dedicated placement support to assist with job applications and interview preparation.

Opportunities for internships or live projects to enhance your skills.

Mock interviews and resume-building sessions to improve your chances of getting hired.

We train you to get hired.


Course Offered By

Croma Campus


You will receive a certificate after completing the program.



Empowering Learning Through Real Experiences and Innovation


Phone (For Voice Call): +91-971 152 6942

WhatsApp (For Call & Chat): +91-971 152 6942

Take a look at the entire curriculum, designed to provide placement guidance.


Ready to streamline your process? Submit your batch request today!

Having a basic understanding of programming (like Python or SQL) can help, but it’s not mandatory. The Data Engineering Course in Gurgaon is designed for beginners.

The program usually lasts from 3 to 6 months, depending on the institute and course format.

Placement depends on your skills and the job market, but the course offers placement assistance, and most students find opportunities within a few months.

You will learn Python, SQL, Hadoop, and Apache Spark, along with AWS and other cloud technologies used in data engineering.

Yes, the certification from the training program, combined with official certifications from platforms like AWS or Azure, will help make your resume stand out to employers.

Highest Salary Offered

Average Salary Hike

Placed in MNCs

Years in Training

fast-tracked into managerial careers.

Get inspired by their progress in the Career Growth Report.
