Course Design By

Nasscom & Wipro

Data processing fundamentals

Data storage fundamentals

Mapping storage systems

Data modeling

Streaming data

Dataflow pipelining

How to operationalize data processing systems

Basic computer knowledge

Basic knowledge of data processing systems

Passion for learning

Basic knowledge of GCP certification

GCP newbies

Aspiring Google Cloud Data Engineers

Engineering graduates

Google Cloud experts who wish to train for other roles

Students who want to clear the Google Cloud Data Engineer exam

Provide quality knowledge about data processing and data processing systems

Make students experts in data modeling

Help students learn how to stream data

Make students familiar with the duties of a Google Cloud Data Engineer

Make students proficient in dataflow pipelining

Prepare students so that they are able to clear the Google Cloud Data Engineer exam without any difficulty

Make students experts in operationalizing ML models

Become comfortable with data processing

Understand how to develop data processing systems

Understand how to stream data

Master key concepts of data modeling

Become a competent Google Cloud Data Engineer

Understand the responsibilities of a Cloud Data Engineer

Become an expert in operationalizing data systems

Become an expert in operationalizing ML models

Google Cloud Data Engineer

Train for roles like data architect, data solutions architect, etc.

Google Cloud professional

Google Cloud trainer

Contributor to the community

Mentor team members

Operationalizes data processing systems

Operationalizes ML models

Helps the company make data-driven decisions

Collect datasets that fulfill the requirements of the business

Design algorithms for transforming data into helpful and actionable information

Design database pipeline architecture

Create data validation methods

Operationalize data processing systems

Operationalize machine learning models

Mentor team members

Virtusa

CloudThat Technologies Pvt. Ltd.

Accenture

Code Clouds

VMware

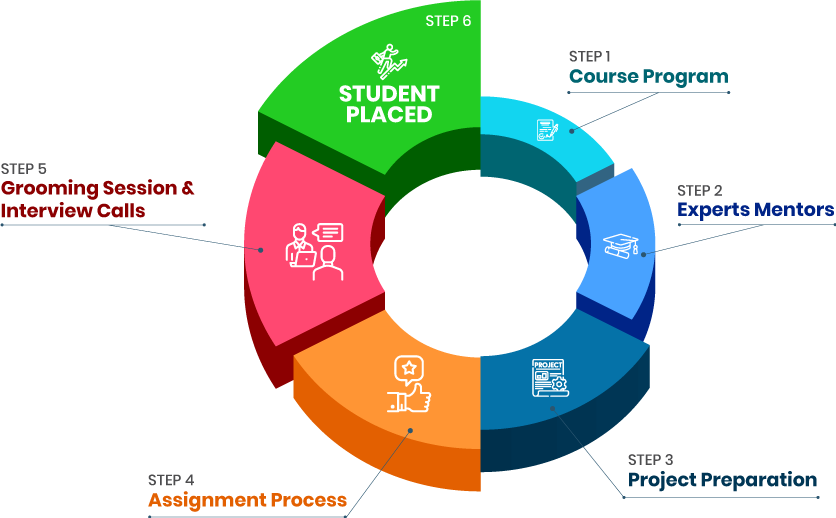

100% placement support

Interview grooming sessions

Resume preparation

Access to the job portal of Croma Campus

Industry-recognized certification

Hefty remuneration

Helps you demonstrate your skills as a Google Cloud Data Engineer

Hike in salary

Lots of job opportunities

We train you to get hired.

Course Offered By

Croma Campus

12-Jul-2025*

07-Jul-2025*

09-Jul-2025*

You will get a certificate after completion of the program.

Empowering Learning Through Real Experiences and Innovation

Phone (For Voice Call): +91-971 152 6942

WhatsApp (For Call & Chat): +91-971 152 6942

Get a peek at the entire curriculum, designed to ensure placement guidance.

Ready to streamline your process? Submit your batch request today!

The Google Cloud Professional Data Engineer training program can be completed in 35-45 days

You will learn from a skilled Google Cloud Data Engineer

You can earn around ₹14,00,000 – ₹23,00,000 per annum after completing the Google Cloud Professional Data Engineer training program
