Course Design By

Nasscom & Wipro

Course Offered By

Croma Campus

05-Jul-2025*

07-Jul-2025*

02-Jul-2025*

You will get a certificate after completing the program.

Empowering Learning Through Real Experiences and Innovation

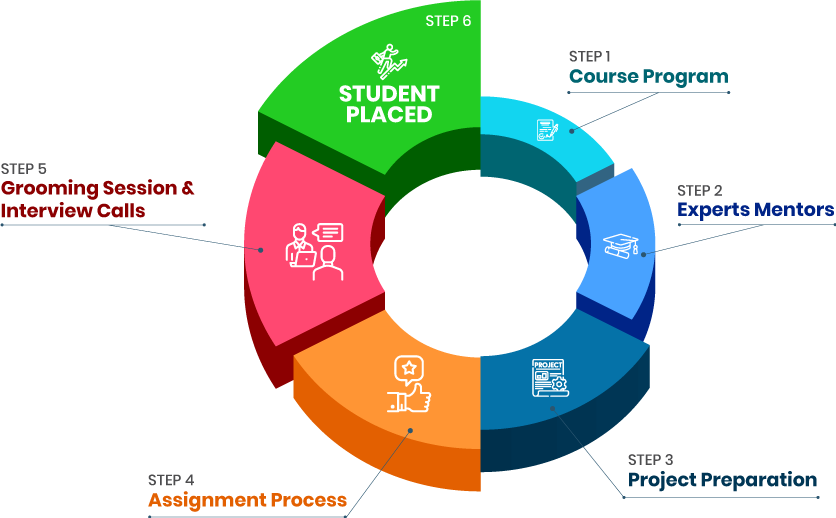

We train you to get hired.

Phone (For Voice Call): +91-971 152 6942

WhatsApp (For Call & Chat): +91-971 152 6942

Get a peek at the entire curriculum, which is designed to ensure placement guidance.

Ready to streamline your process? Submit your batch request today!

In a MapReduce word count, the map phase counts the words in each document, while the reduce phase aggregates those counts across the entire collection of documents. For the map phase, the input data is divided into splits, which are processed by map tasks running in parallel across the Hadoop cluster.
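The word-count flow described here can be sketched in plain Python. This is a minimal illustration, not Hadoop code: the function names (`split_input`, `map_task`, `shuffle`, `reduce_task`, `word_count`) are hypothetical, and the splits are processed one after another here, whereas Hadoop would run the map tasks in parallel.

```python
from collections import Counter, defaultdict


def split_input(documents, num_splits):
    """Divide the input documents into splits, one per (hypothetical) map task."""
    return [documents[i::num_splits] for i in range(num_splits)]


def map_task(split):
    """Map phase: count the words in each document and emit (word, count) pairs."""
    pairs = []
    for doc in split:
        per_document = Counter(doc.lower().split())
        pairs.extend(per_document.items())
    return pairs


def shuffle(pairs):
    """Group the emitted counts by word so each word reaches a single reducer."""
    grouped = defaultdict(list)
    for word, count in pairs:
        grouped[word].append(count)
    return grouped


def reduce_task(word, counts):
    """Reduce phase: add up the per-document counts for one word."""
    return word, sum(counts)


def word_count(documents, num_splits=2):
    """Run the whole pipeline sequentially on an in-memory list of documents."""
    mapped = []
    for split in split_input(documents, num_splits):
        mapped.extend(map_task(split))
    grouped = shuffle(mapped)
    return dict(reduce_task(word, counts) for word, counts in grouped.items())


if __name__ == "__main__":
    print(word_count(["the cat sat", "the dog sat down"]))
```

The grouping step between map and reduce is what lets each word's counts be summed independently of every other word.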

The NameNode is the Hadoop node that stores the file location information for HDFS (Hadoop Distributed File System), that is, the metadata recording where each file's data is kept in the cluster. To study it in detail, consider taking a professional course.
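The NameNode's role as a lookup table for file locations can be pictured with a toy model. This sketch is an assumption-laden simplification, not the HDFS API: the class `ToyNameNode`, its methods, and the block and node names are all hypothetical, and it only shows that the NameNode holds location metadata rather than the file contents.

```python
class ToyNameNode:
    """Toy stand-in for a NameNode: keeps metadata only, never file data."""

    def __init__(self):
        self.file_blocks = {}      # file path -> ordered list of block ids
        self.block_locations = {}  # block id -> list of node names holding it

    def add_file(self, path, blocks):
        """Register a file; `blocks` maps each block id to the nodes storing it."""
        self.file_blocks[path] = list(blocks)
        self.block_locations.update(blocks)

    def locate(self, path):
        """Return (block id, nodes) pairs telling a client where to read from."""
        return [(block, self.block_locations[block])
                for block in self.file_blocks[path]]


if __name__ == "__main__":
    name_node = ToyNameNode()
    name_node.add_file("/logs/a.txt", {"blk_1": ["dn1", "dn2"],
                                       "blk_2": ["dn2", "dn3"]})
    print(name_node.locate("/logs/a.txt"))
```

A client would ask this lookup table where a file lives and then fetch the data from the listed nodes.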

Yes, it is very much in demand and is expected to remain widely used in the coming years. If this field interests you, consider earning a certification and building a career in it.

Yes, we will provide you with the study material. You can also use our LMS portal to access extra notes and class recordings.

FOR QUERIES, FEEDBACK OR ASSISTANCE

Get the best support with us

For Voice Call: +91-971 152 6942

For WhatsApp Call & Chat: +91-9711526942