Course Design By

Nasscom & Wipro

Right at the beginning of the course, our trainers will help you know its basic fundamentals.

You will also receive sessions concerning how to start working with real-life industry use cases.

You will get the chance to analyze Hadoop features like HDFS, YARN, Hive, MapReduce, Pig, Spark, HBase, Sqoop, Flume, Oozie, Hive, etc.

You will also get the chance to choose from roles like Developer, Administrator, Data Analyst, Tester, and Solution Architect.

In fact, you will end up passing the certifications ensures deep learning of various big data concepts.

Right at the beginning of your career, you will earn around Rs. 3.6 Lakh, which is quite good for freshers.

On the other hand, an experienced Big Data Hadoop Developer earns Rs. 11.5 Lakh annually.

Further, by acquiring more work experience along with the latest skills, your salary structure will expand.

By taking projects as a freelancer, you will make some good additional income also.

By opting for its legit training from a reputed educational foundation, you will turn into a knowledgeable Big Data Hadoop Developer.

Well, withholding a proper accreditation of Big Data Hadoop Developer, you will be offered an excellent salary package right from the beginning of your career.

Knowing each side of Big Data Hadoop Developer will also push you forward to come up with innovative applications.

Knowing this skill will eventually enhance your resume.

You will always have numerous jobs offers in hand.

Your foremost duty will be to meet with the development team to assess the organization’s big data infrastructure.

You will also have to design and code Hadoop applications to examine data collections.

Creating data processing frameworks, extracting data and isolating data clusters will also be counted as your main responsibility.

You will also have to do the testing scripts and analyzing results.

By getting started with this specific course, you will end up strengthening your base knowledge.

You will know the various features and offerings of this technology by getting in touch with a well-established Big Data Hadoop Training Institute in Gurgaon.

You will also know about building a new sort of application and implying some latest features.

You will end up obtaining some untold, and hidden facts from the Big Data Hadoop Training in Gurgaon respectively.

UST, Octro.com, Impetus, Crisp Analytics, etc. are some of the well-known companies hiring skilled candidates.

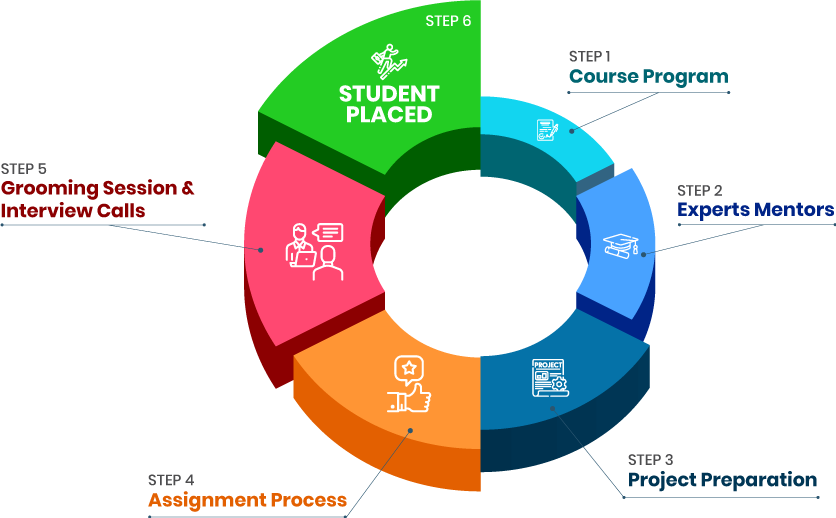

By joining Croma Campus, you will get the opportunity to get placed in your choice of companies post enrolling with the Big Data Hadoop course.

Our trainers will also help you in building an impressive resume.

They will also suggest you some effective tips to pass the interviews.

we train you to get hired.

By registering here, I agree to Croma Campus Terms & Conditions and Privacy Policy

+ More Lessons

Course Design By

Nasscom & Wipro

Course Offered By

Croma Campus

Stories

success

inspiration

career upgrad

career upgrad

career upgrad

career upgrad

05-Jul-2025*

07-Jul-2025*

09-Jul-2025*

05-Jul-2025*

07-Jul-2025*

09-Jul-2025*

You will get certificate after

completion of program

You will get certificate after

completion of program

You will get certificate after

completion of program

in Collaboration with

Empowering Learning Through Real Experiences and Innovation

we train you to get hired.

Phone (For Voice Call):

+91-971 152 6942WhatsApp (For Call & Chat):

+91-971 152 6942Get a peek through the entire curriculum designed that ensures Placement Guidance

Course Design By

Course Offered By

Ready to streamline Your Process? Submit Your batch request today!

Our strong associations with top organizations like HCL, Wipro, Dell, Birlasoft, TechMahindra, TCS, IBM etc. makes us capable to place our students in top MNCs across the globe. 100 % free personality development classes which includes Spoken English, Group Discussions, Mock Job interviews & Presentation skills.

The need of It professionals are increasing so Big data hadoop is one of the better choice for career growth and enough income. Apache Hadoop provides you the better package.

Join Croma Campus and complete your training with free demo class provided by the institute before joining.

Industry standard projects like Executive Summary, Algorithm Marketplaces, Edge analytics are included in our training programs and Live Project based training with trainers having 5 to 15 years of Industry Experience.

For details information & FREE demo class call us on +91-9711526942 or write to us info@cromacampus.com

Address: - G-21, Sector-03, Gurgaon (201301)

FOR QUERIES, FEEDBACK OR ASSISTANCE

Best of support with us

For Voice Call

+91-971 152 6942For Whatsapp Call & Chat

+91-9711526942