Course Design By

Nasscom & Wipro


Course Offered By

Croma Campus


05-Jul-2025*

07-Jul-2025*

02-Jul-2025*


You will receive a certificate after completing the program.



Empowering Learning Through Real Experiences and Innovation

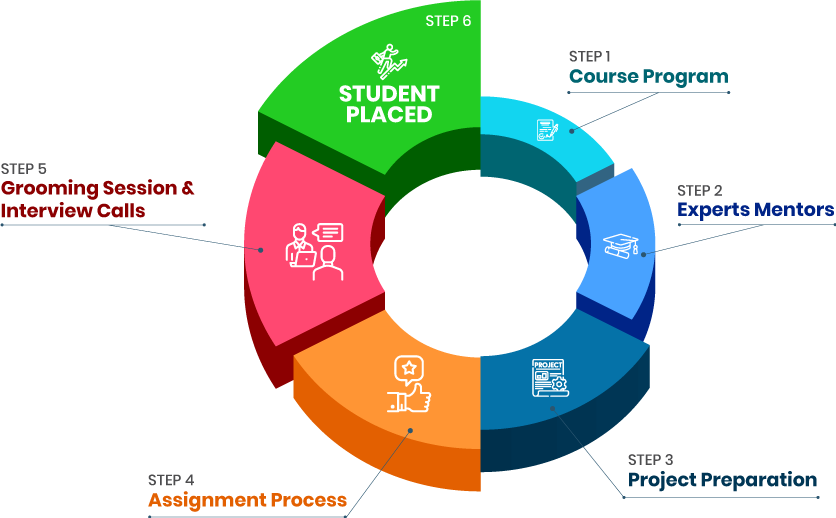

We train you to get hired.

Phone (For Voice Call):

+91-971 152 6942

WhatsApp (For Call & Chat):

+91-971 152 6942

Get a look at the entire curriculum, designed to include placement guidance.


Ready to streamline your process? Submit your batch request today!

Pre-course reading will be provided so that you are familiar with the content before the class begins.

Yes. Visit our payment plans page to learn more about the payment options available at Croma Campus.

You can register for our next training session; we offer only two to three sessions each year.

Yes, there are a variety of payment options.

FOR QUERIES, FEEDBACK OR ASSISTANCE

Get the best support from us

For Voice Call

+91-971 152 6942

For WhatsApp Call & Chat

+91-971 152 6942