Course Design By

Nasscom & Wipro

Learn the basics of data engineering and how data flows within an organization.

Master key technologies like SQL, Hadoop, Spark, and cloud platforms (AWS, Azure).

Understand ETL processes, data warehousing, and data pipeline architecture.

Gain hands-on experience with real-time data processing and management.

Explore techniques for optimizing data storage, processing, and retrieval.

Entry-level data engineers: 4.5 to 7 LPA (lakh rupees per annum).

The salary may increase significantly with experience and expertise.

Professionals can earn higher salaries with proficiency in big data tools and cloud technologies.

Career path: Junior Data Engineer, then Senior Data Engineer, then Data Architect.

With expertise, you can take leadership roles like Data Engineering Manager or Director of Data Engineering.

Continuous learning and certifications will lead to better job opportunities and higher salaries.

Data engineering is a high-demand, future-proof career.

The course provides in-depth knowledge of industry-leading tools and technologies.

Delhi is a tech hub with numerous job opportunities for data engineers.

The course offers hands-on training with real-world projects.

Building and maintaining data pipelines.

Managing databases and cloud infrastructure.

Ensuring data quality and availability for analytics teams.

Collaborating with data scientists to ensure effective data use.

Optimizing data storage and processing systems.

Information Technology (IT): Companies developing software solutions.

Finance & Banking: Analyzing and managing large volumes of transactional data.

E-commerce: Handling user data for analytics and business insights.

Healthcare: Managing patient data for research and decision-making.

Telecommunications: Analyzing data from customer networks and services.

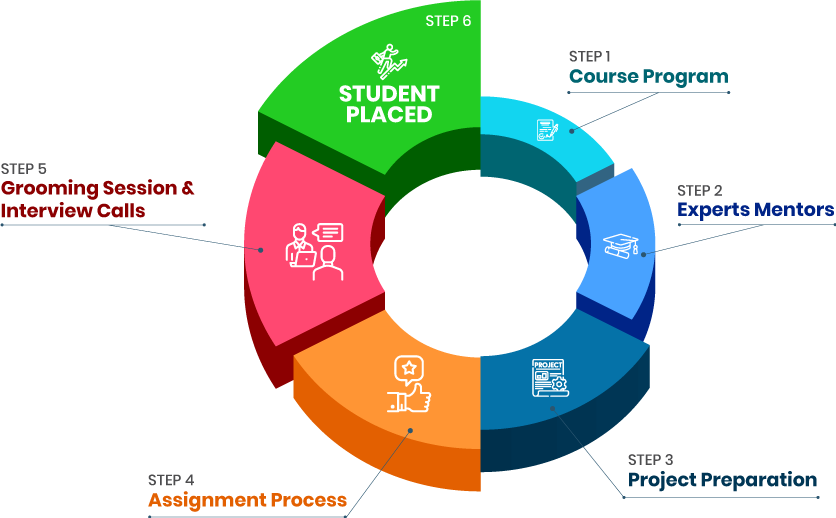

Resume-building workshops and interview preparation sessions.

Collaboration with top tech companies for placements.

Dedicated placement cell to guide students through job applications.

We train you to get hired.


Course Offered By

Croma Campus


You will get a certificate after completion of the program.



Empowering Learning Through Real Experiences and Innovation


Phone (For Voice Call): +91-971 152 6942

WhatsApp (For Call & Chat): +91-971 152 6942

Get a peek at the entire curriculum, designed to ensure placement guidance.


Ready to streamline your process? Submit your batch request today!

Basic knowledge of programming languages like Python and SQL is recommended.

With hands-on training and certifications, students have a high chance of securing a job shortly after completing the course.

The duration of the course typically ranges from 3 to 6 months, depending on the program.

Yes, the Data Engineering Course in Delhi includes comprehensive training in cloud platforms (AWS, Azure) and big data technologies like Hadoop and Spark.

Some institutes may offer a satisfaction guarantee or refund policy. It’s best to confirm this with the provider of the Data Engineer Training in Delhi.

Highest Salary Offered

Average Salary Hike

Placed in MNCs

Years in Training

fast-tracked into managerial careers.

Get inspired by their progress in the Career Growth Report.

FOR QUERIES, FEEDBACK OR ASSISTANCE

Best of support with us

For Voice Call: +91-971 152 6942

For WhatsApp Call & Chat: +91-9711526942